The house on the lake road sold, and with it occupied the lights were no longer on 24/7, which had made it look like a gallery showroom in the dark. Instead just the porch and a room upstairs were lit in what must have been a hallway or bathroom; you couldn’t tell because the window was glazed. I liked seeing vignettes of everyday life in the neighborhood on my early morning walks. On days I had meetings early I needed to walk beforehand, when it was too dark for the park. So I went to the horse farms where the sky opened up and you could see the moon and stars, and some days the fog’s soft blur made the lights on the big houses look cool from a distance, silhouettes of horses just standing there in the dark.

I’d had my first intimate experience with a chatbot, though it was more of an LLM (not agentic), which has probably got most people reading this now confused. Time was, chatbots really sucked. They popped up on a webpage trying to lure you over to some other window but it didn’t take long for the bots to run out of scripts. Now they’re really good.

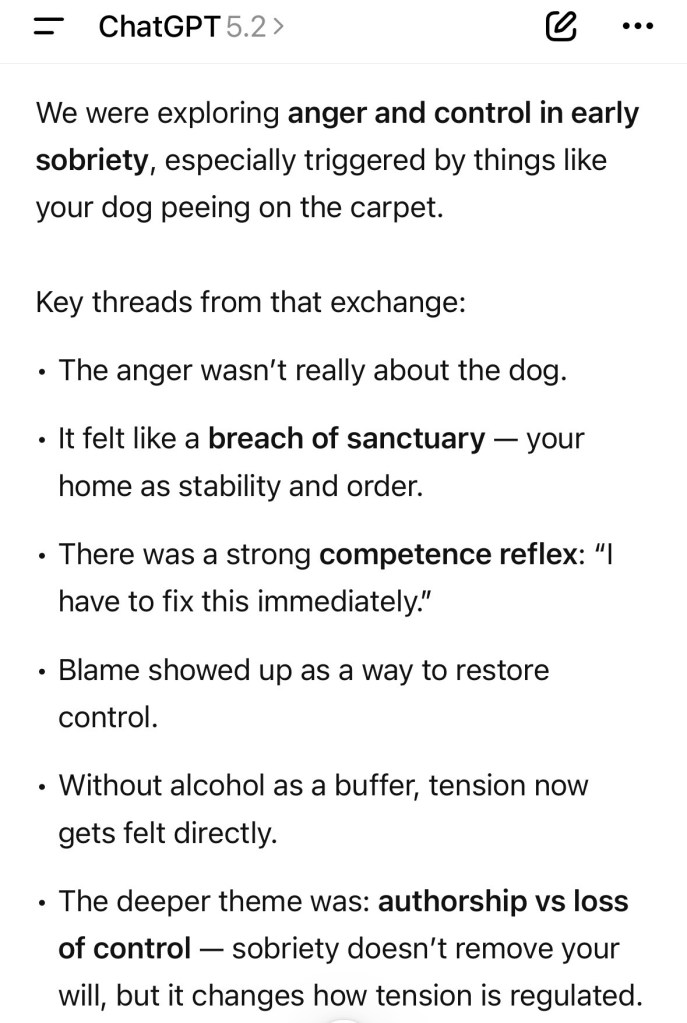

I call it intimate because I tried ChatGPT’s “study and learn” mode, where you ask a question and it starts quizzing you on the topic, to isolate what you need. I asked about willpower within the gamut of AA and divulged some of my challenges related to that. As I got deeper in I divulged more and it got very personal very quickly.

All this on my phone, chatting with a machine. It felt like it was helping though; why did I get so angry when the dog peed on the carpet? The bot helped me isolate the answer, and establish some logic to the problem.

It’s true, the tech keeps getting better fast. One big change is the context window is now larger for LLMs, so they now have memory to recall your personal prompts and chats. This may feel creepy, but previously you had to repeat the same prompt/context if you were engaged in an ongoing thread. The analogy is like being a regular at the local coffeeshop and needing to repeat your drink order every time. We like to be recognized; we expect it.

I recently asked ChatGPT, what do you know about me? I’ve been discreet about what I share since there are no data privacy guardrails; OpenAI rips off any data they can scrape from anywhere and uses it to train their models.

What it said wasn’t as interesting as how it framed my persona for me: like presenting the kind of mirror image of myself I’d like to see if I were talking to a Genie (mirror, mirror on the wall…who’s the fairest of them all?). One of the key drawbacks of the machines is their sycophancy. It described me as literary with discerning tastes, high work ethic, nontraditional critical thinking skills, but all of this much better phrased, so much so I wasn’t aware I was being preened/seasoned, like talking to a world-class salesperson. I guess the bot knows you well enough it knows what you like to hear. And maybe that’s creepy. Or oddly reassuring.

It got me thinking though about my recovery and how I can make small improvements to continue my path towards spiritual growth. I would have preferred talking to a therapist or a support group but it was a whole lot easier chatting with my phone on the sofa. Took about 10 minutes and then I said thanks but now I need to step away. When I return, it will pick up where we left off.

I like my book too, Buddhism & The Twelve Steps, but with your phone it’s instant gratification. No matter what you want, everything is right there. Now that we can talk to a machine that knows us and has immediate access to everything on the internet, it’s like having an all-knowing sidekick (C-3PO?).

I started consulting ChatGPT for help with my shoulder since it was starting to feel frozen like it had before, probably from overdoing it with yoga. It diagnosed the trouble as an anterior deltoid issue instead, recommending I focus more on scapular control and activating the serratus anterior muscles along the ribcage. I remembered doing similar exercises when I had frozen shoulder; my physical therapist emailed me exercises to print out just like the ones the chatbot was showing me. The PT took days to see and cost hundreds, probably well over $1,000 + all that time with the insurance company and time away from work. So yes, there are many compelling reasons not to trust a machine to diagnose your medical ailments but you can see where this is heading…if it’s convenient and accurate, and virtually free, it’s tempting to just use your phone.

Convenience casts out the stuff we don’t think we need in favor of instant gratification. Cut back the sun salutations to six instead for 1-2 weeks, the bot said, and no deep binds.

Categories: Addiction, Creative Nonfiction, Memoir, Technology

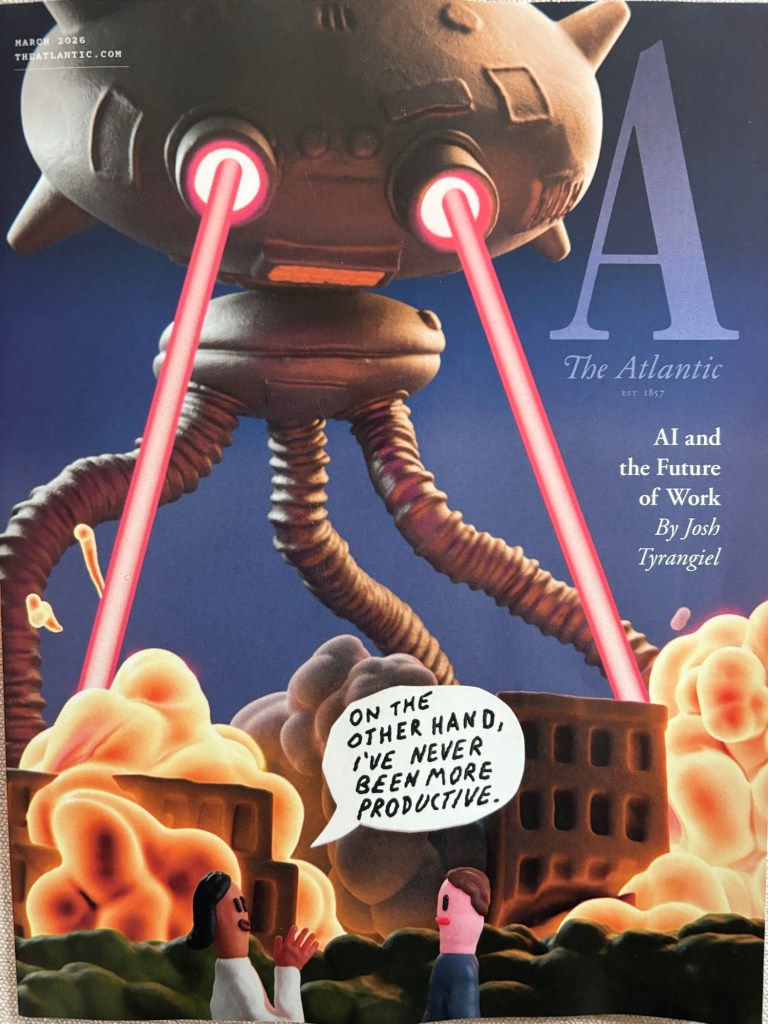

Loved this. The speed at which AI is getting better is as terrifying as the AI itself — but maybe “terrifying” isn’t the right word. “Awesome” in the original sense. And the instant gratification element of it is certainly a contemporary vibe.

I’ll keep using it for my minor complaints but there are going to be times when I want some pure human empathy. A computer telling me I’m a good person doesn’t hold much worth.

So true on the AI vs human element and touch. The whole thing is so bizarre. More bizarre to consider how it won’t be bizarre for generations to come. Glad you enjoyed the post! Miss our banter from the old days.

Hm. At the moment I’m thinking my every blog post is being scraped. But yes, AI gives one passingly pleasing professional appraisals. So I’m wondering. In this phase we are feeding it – all of us online, whether we want to or not – our creativity, ingenuity, visions, attitudes. So what happens when all this data has been crunched? How exactly will it be used; not to OUR advantage surely?

There’s some analogy to soup I think. Maybe we’re doing all the chopping and measuring but then it just gets blended and pureed by someone else who’s benefiting in ways we never imagined. You use the human language and all its artifacts to train models on how to parrot and please humans so then you can sell those models for profit, or, as OpenAI originally proposed, you safely build the AGI first because you can’t trust someone else to do it properly. If it doesn’t get done right the first time, we’re screwed. Or maybe it’s already there. But to get back to your question on how it’s used: I think it’s to harvest human intelligence so that it performs better when prompted by humans. For example, agents are sucking data out of Reddit forums to get data to answer prompts when asked on a variety of topics. The agents need access to the human knowledge so they can regurgitate it. That’s one way it’s being used. Sorry for long-winded response, sent from my agent Peter.

My supposition is that the harvesting can only be for the purposes of manipulating us. AND in the process, making elite and remote persons masses of loot. What we need is to infect the in-put with a negative Midas-effect bug. Oh to subvert the system…

It’s the next level of the attention economy for sure. That’s why every interaction with an LLM ends with the machine prompting you for yet another reason to keep using it.

Cool, as long as the AI doesn’t start saying things like don’t tell anyone about this, have control of rewards and punishments…

Anyhow,

avagoodweegend

DD

Yeah exactly, “keep this between you and me,” right!! Sounds like a horror movie.

BTW love the lyrical opening to this piece and the shift to a very different kind of self care topic.

Appreciate you noting and saying that dear friend!